Understanding Blob Storage

When I was building RoomCraft AI, I needed somewhere to store all the AI-generated room images. Users upload a photo of their room, and the app generates 3 redesigned versions. That's a lot of images piling up fast.

I started looking at options: Cloudinary, AWS S3, Google Cloud Storage. Cloudinary seemed easy but got expensive at scale. S3 was the industry standard, but its egress fees add up quickly when users keep viewing their generated designs. That's when I found Cloudflare R2.

R2 is S3-compatible (so existing S3 SDKs and tools work with it) and has zero egress fees. With S3, you pay for the data transferred out every time a user downloads an image. With R2, egress is free. For an app like RoomCraft, where users constantly view their generated designs, this saved me a ton.

This experience taught me how critical blob storage is in modern system design. So let me break it down.

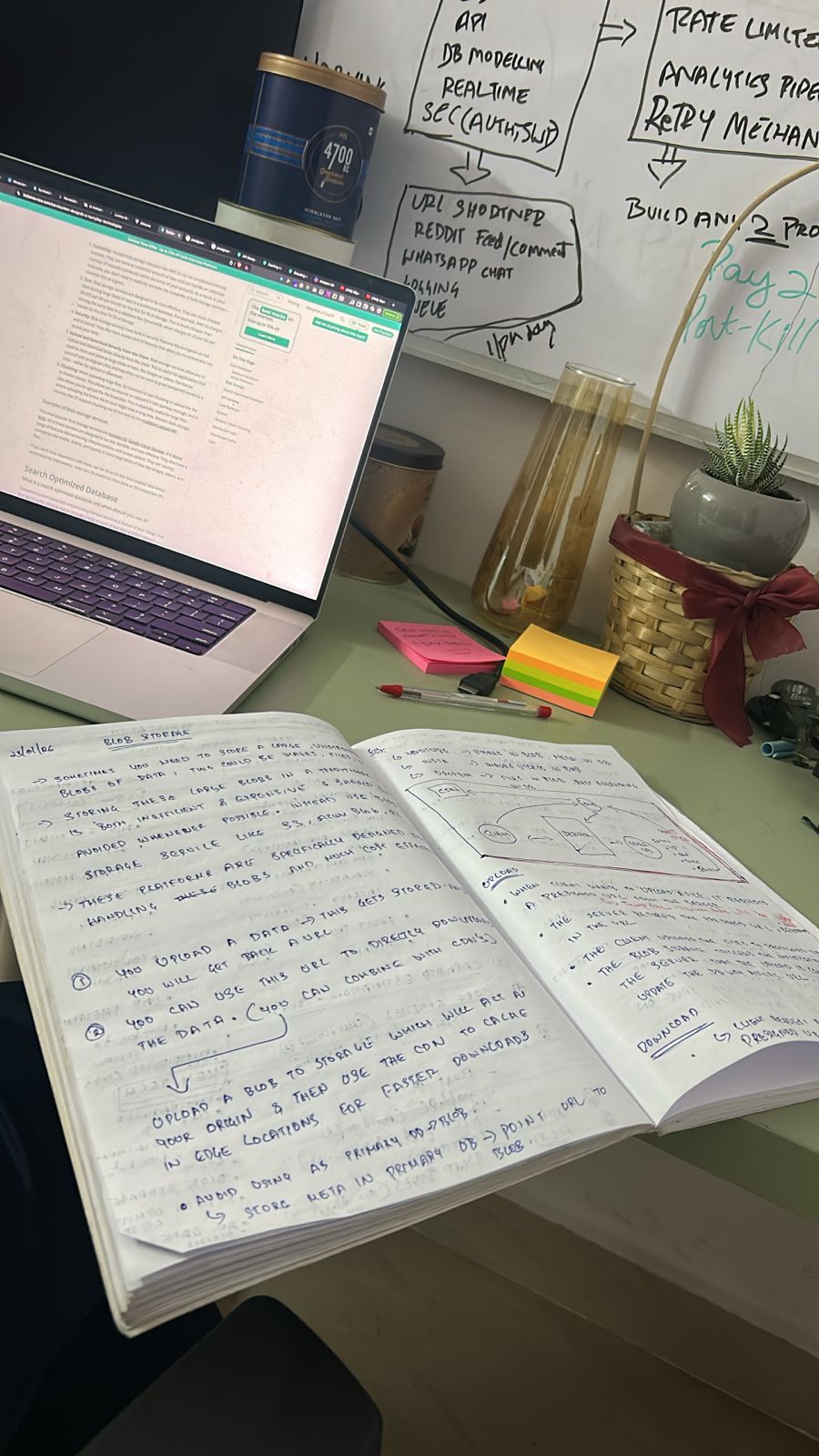

From my notes while learning about blob storage architecture

What is Blob Storage?

In modern system design, we often need to store massive amounts of unstructured data. Whether it's high-definition videos, user profile pictures, or raw log files, a traditional database isn't the right tool for the job.

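Storing large blobs (Binary Large Objects) in a traditional database is inefficient and expensive. Every file bloats the tables, slows down backups and replication, and consumes database storage that costs more per gigabyte than object storage. It should be avoided whenever possible. Instead, we use dedicated Blob Storage services like AWS S3, Azure Blob Storage, or Cloudflare R2.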

Why Cloudflare R2?

Before diving into the architecture, let me tell you why I chose R2 for RoomCraft:

- Zero egress fees: S3 charges for the data transferred out every time someone downloads a file. R2 doesn't. For image-heavy apps, this is huge.

- S3-compatible API: Close to a drop-in replacement. Existing S3 SDKs work once you point them at the R2 endpoint, so I didn't have to learn anything new.

- Built-in CDN: When a bucket is served through a custom domain, Cloudflare's edge network can cache images close to users, so they load fast globally.

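- Simple pricing: You pay for storage plus per-request operations (Class A operations such as writes, Class B operations such as reads), with a free tier and no bandwidth charges. No surprise egress bills.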

For side projects and startups watching their budget, R2 is a no-brainer. More broadly, blob storage is designed specifically for handling large-scale unstructured data cost-effectively and with high availability. But don't just take my word for it: look at who else uses it.

Who Uses Blob Storage?

Everyone. Netflix stores its entire video library in blob storage. Spotify keeps audio files there. Slack stores every file you've ever uploaded. The pattern is the same: large files go in blob storage, metadata (titles, timestamps, permissions) stays in a traditional database.

Key Features and Benefits

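- Durability: Objects are replicated across multiple devices (and, in most services, multiple facilities), so your data stays safe even if a data center fails. S3 Standard, for example, is designed for 99.999999999% (eleven nines) durability.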

- Scalability: Services like S3 are virtually unlimited. You can scale from 1 GB to 1 PB without changing your architecture.

- Cost: Storing files here is significantly cheaper than storing them in a traditional database.

- Security: Most platforms offer built-in encryption and fine-grained access control through IAM policies.

- Direct Upload/Download: You can bypass your server and have the client talk directly to storage, saving you bandwidth and CPU.

When designing a system that uses Blob storage, you shouldn't just "dump" everything there. You need a split-data approach:

- Blob Storage: Stores the actual large data file.

- Primary Database: Stores "Metadata" (Entity ID, Name, Description) and a URL that points to the object in Blob storage.

Sequence Diagram

This diagram illustrates the relationship between the Client, your App Server, the Blob Storage (S3), and the CDN.

Database Schema (Metadata)

The database shouldn't hold the file itself, but rather an Entity containing:

- ID: Unique identifier for the record.

- Name: The display name of the file.

- Desc: A brief description of the content.

- BURL (Blob URL): The pointer/URL to the file in Blob storage.
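To make the split concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `files` table, the helper functions, and the example URL are hypothetical. The column is named `description` because `DESC` is an SQL keyword. The point is that each row holds only the pointer, while the image bytes stay in the bucket.

```python
import sqlite3
import uuid
from typing import Optional

# Hypothetical metadata table mirroring the ID / Name / Desc / BURL fields.
# No file bytes are stored here, only a pointer (burl) into blob storage.
SCHEMA = """
CREATE TABLE IF NOT EXISTS files (
    id          TEXT PRIMARY KEY,
    name        TEXT NOT NULL,
    description TEXT,
    burl        TEXT NOT NULL
)
"""

def save_metadata(conn: sqlite3.Connection, name: str, description: str, burl: str) -> str:
    """Insert one metadata row and return its generated ID."""
    file_id = str(uuid.uuid4())
    conn.execute(
        "INSERT INTO files (id, name, description, burl) VALUES (?, ?, ?, ?)",
        (file_id, name, description, burl),
    )
    conn.commit()
    return file_id

def get_metadata(conn: sqlite3.Connection, file_id: str) -> Optional[dict]:
    """Look up a row by ID; the caller then fetches the bytes from burl."""
    row = conn.execute(
        "SELECT id, name, description, burl FROM files WHERE id = ?", (file_id,)
    ).fetchone()
    if row is None:
        return None
    return dict(zip(("id", "name", "description", "burl"), row))

conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
fid = save_metadata(
    conn,
    "living-room-v1.png",
    "Redesign 1 of 3",
    "https://images.example.com/rooms/abc123/v1.png",  # hypothetical bucket URL
)
print(get_metadata(conn, fid)["burl"])
```

Many systems store the object key (e.g. `rooms/abc123/v1.png`) instead of a full URL. That way the bucket domain or CDN can change without rewriting every row.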

High-Level Workflow

- Upload: The client uploads data and receives a URL.

- Download: The client uses that URL to download the data directly.

- Optimization: To speed things up, you can use a CDN (Content Delivery Network) to cache the data at "edge locations" closer to the user.
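The CDN step is a read-through cache: an edge location serves its copy until it expires, and only then goes back to blob storage. The toy `EdgeCache` class below is a hypothetical sketch of that behavior for a single edge. A dict stands in for the bucket, and an injectable clock lets the demo show expiry without waiting. Real CDNs add eviction, cache-control headers, and many edges, none of which is modeled here.

```python
import time
from typing import Callable, Dict, Tuple

class EdgeCache:
    """Toy read-through cache standing in for one CDN edge location."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes],
                 ttl_seconds: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[bytes, float]] = {}  # key -> (data, expires_at)
        self.origin_fetches = 0

    def get(self, key: str) -> bytes:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]  # fresh hit: blob storage is never contacted
        # Miss or expired: fetch from blob storage and refresh the edge copy.
        data = self._fetch(key)
        self.origin_fetches += 1
        self._entries[key] = (data, now + self._ttl)
        return data

# A dict stands in for the bucket; fake_now lets us move time forward by hand.
bucket = {"rooms/abc123/v1.png": b"\x89PNG..."}
fake_now = [0.0]
edge = EdgeCache(bucket.__getitem__, ttl_seconds=60, clock=lambda: fake_now[0])
edge.get("rooms/abc123/v1.png")   # miss: fetched from origin
edge.get("rooms/abc123/v1.png")   # hit: served from the edge
fake_now[0] = 120.0
edge.get("rooms/abc123/v1.png")   # expired: fetched from origin again
print(edge.origin_fetches)        # 2
```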

Efficient Uploads and Downloads

To keep the server from becoming a bottleneck, we use Presigned URLs.

The Upload Process

- Request: When a client wants to upload, it requests a Presigned URL from the server. This is a temporary, signed link that grants permission for one specific operation on one object.

- Response: The server returns the URL and records the intent (a pending upload) in the database.

- Direct Upload: The client uploads the file directly to the Blob storage via the Presigned URL.

- Notification: Once complete, the Blob storage triggers a notification to the server, which then updates the database with the "Actual URL."
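The upload flow above can be sketched end to end. Real S3 and R2 presigned URLs are signed with AWS Signature Version 4, and you would normally generate them with an SDK (for example boto3's `generate_presigned_url`). To keep the mechanics visible, this sketch swaps in a simplified HMAC-SHA256 scheme. The secret, the endpoint, the `uploads/` key prefix, and every function name are hypothetical, and in-memory dicts stand in for the database and the bucket.

```python
import hashlib
import hmac
import time
from typing import Callable, Dict
from urllib.parse import parse_qs, urlencode, urlparse

SECRET = b"hypothetical-signing-key"          # shared by app server and storage
STORAGE_BASE = "https://storage.example.com"  # hypothetical bucket endpoint

db: Dict[str, dict] = {}       # stands in for the primary database
bucket: Dict[str, bytes] = {}  # stands in for blob storage

def _sign(method: str, key: str, expires: int) -> str:
    # The signature covers method, object key, and expiry, so a PUT URL for
    # one key can't be replayed as a GET or against a different key.
    msg = f"{method}\n{key}\n{expires}".encode()
    return hmac.new(SECRET, msg, hashlib.sha256).hexdigest()

def presign(method: str, key: str, ttl: int, now: float) -> str:
    expires = int(now) + ttl
    query = urlencode({"expires": expires, "sig": _sign(method, key, expires)})
    return f"{STORAGE_BASE}/{key}?{query}"

def verify(method: str, url: str, now: float) -> bool:
    parsed = urlparse(url)
    params = parse_qs(parsed.query)
    expires = int(params["expires"][0])
    expected = _sign(method, parsed.path.lstrip("/"), expires)
    return now < expires and hmac.compare_digest(expected, params["sig"][0])

# Request/Response: the app server records the intent and returns a short-lived URL.
def request_upload(file_id: str, now: float) -> str:
    key = f"uploads/{file_id}"
    db[file_id] = {"key": key, "status": "pending", "burl": None}
    return presign("PUT", key, ttl=300, now=now)

# Direct Upload: storage checks the signature itself; the app server isn't involved.
def storage_put(url: str, data: bytes, now: float, notify: Callable[[str], None]) -> None:
    if not verify("PUT", url, now):
        raise PermissionError("invalid or expired signature")
    key = urlparse(url).path.lstrip("/")
    bucket[key] = data
    notify(key)  # Notification: stands in for a storage event notification

def on_upload_complete(key: str) -> None:
    for row in db.values():
        if row["key"] == key:
            row["status"] = "complete"
            row["burl"] = f"{STORAGE_BASE}/{key}"

t0 = time.time()
url = request_upload("img-1", now=t0)
storage_put(url, b"image bytes", now=t0 + 10, notify=on_upload_complete)
print(db["img-1"]["status"])             # complete
print(verify("PUT", url, now=t0 + 301))  # False: the link has expired
```

In production the notification would arrive asynchronously, for example through S3 Event Notifications or R2 event notifications delivered to a Cloudflare Queue, rather than as a direct function call.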

The Download Process

- The client requests a file; the server returns a Presigned URL.

- The client uses that URL to download the file via a CDN, which serves it from its cache or, on a miss, fetches it from the underlying Blob storage.
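One detail this flow raises: each presigned URL carries its own expiry and signature in the query string. If the CDN cached by the full URL, every freshly signed link would miss the cache. One way to handle this is to check the signature on every request but cache by object path. The sketch below shows that idea with hypothetical parameter names (`expires`, `sig`) and a stubbed verifier. It does not reproduce any particular CDN's behavior.

```python
from typing import Callable, Dict
from urllib.parse import parse_qsl, urlencode, urlparse

SIGNATURE_PARAMS = {"expires", "sig"}  # hypothetical signature query parameters

def cache_key(url: str) -> str:
    """Drop signature params so all signed URLs for one object share an entry."""
    parsed = urlparse(url)
    kept = sorted((k, v) for k, v in parse_qsl(parsed.query) if k not in SIGNATURE_PARAMS)
    query = f"?{urlencode(kept)}" if kept else ""
    return f"{parsed.netloc}{parsed.path}{query}"

class SignedEdge:
    """Toy CDN edge: authorize every request, but share cached bytes per object."""

    def __init__(self, verify: Callable[[str], bool], origin: Callable[[str], bytes]):
        self._verify = verify
        self._origin = origin
        self._cache: Dict[str, bytes] = {}
        self.origin_hits = 0

    def get(self, url: str) -> bytes:
        if not self._verify(url):  # checked even when the bytes are cached
            raise PermissionError("invalid or expired signature")
        key = cache_key(url)
        if key not in self._cache:  # miss: proxy the request to blob storage
            self._cache[key] = self._origin(urlparse(url).path.lstrip("/"))
            self.origin_hits += 1
        return self._cache[key]

origin = {"rooms/abc123/v1.png": b"png-bytes"}
# Stub verifier for the demo; a real edge would validate signature and expiry.
edge = SignedEdge(verify=lambda u: "sig=" in u, origin=origin.__getitem__)
edge.get("https://cdn.example.com/rooms/abc123/v1.png?expires=1700000000&sig=aaa")
edge.get("https://cdn.example.com/rooms/abc123/v1.png?expires=1700000300&sig=bbb")
print(edge.origin_hits)  # 1
```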

Advanced Technique: Chunking & Multipart Uploads

When dealing with massive files, uploading the entire file in one go is risky. If the connection drops at 99%, you have to start over.

Chunking solves this by breaking the file into smaller pieces.

- Resumability: If an upload fails, you only need to re-upload the specific "chunk" that failed.

- Parallelism: You can upload multiple chunks at the same time, significantly speeding up the process.

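- Multipart Upload API: Most S3-compatible platforms provide a specific API for this. In S3 it is a three-step flow: CreateMultipartUpload, one UploadPart call per chunk, then CompleteMultipartUpload.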
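Before any bytes move, the client has to decide how to cut the file up. The following is a hypothetical planning helper, not part of any SDK. It splits a file into numbered parts using S3's documented limits (at least 5 MiB per part except the last, at most 10,000 parts). For resumption, it returns only the parts the storage service has not yet acknowledged.

```python
from typing import List, NamedTuple, Set

MIN_PART_SIZE = 5 * 1024 * 1024  # S3 minimum for every part except the last
MAX_PARTS = 10_000               # S3 limit on parts per multipart upload

class Part(NamedTuple):
    number: int  # 1-based, like S3's PartNumber
    offset: int  # byte offset into the file
    length: int  # bytes in this part

def plan_parts(file_size: int, part_size: int = MIN_PART_SIZE) -> List[Part]:
    """Split a file of file_size bytes into numbered, contiguous parts."""
    if part_size < MIN_PART_SIZE:
        raise ValueError("part_size is below the 5 MiB minimum")
    count = max(1, -(-file_size // part_size))  # ceiling division
    if count > MAX_PARTS:
        raise ValueError("too many parts; increase part_size")
    return [
        Part(n + 1, n * part_size, min(part_size, file_size - n * part_size))
        for n in range(count)
    ]

def remaining_parts(plan: List[Part], completed: Set[int]) -> List[Part]:
    """Resume: only parts not yet acknowledged need to be (re)sent."""
    return [p for p in plan if p.number not in completed]

MiB = 1024 * 1024
plan = plan_parts(12 * MiB)
print([p.length // MiB for p in plan])                    # [5, 5, 2]
print([p.number for p in remaining_parts(plan, {1, 3})])  # [2]
```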

Pro Tip: When using the Multipart Upload API, the client splits the file into chunks (e.g., 5 MiB each; S3 requires every part except the last to be at least 5 MiB). This allows for parallel uploads, which is essential for large video files. If the user loses their internet connection, the system only needs to retry the chunks that weren't finished, rather than the entire multi-gigabyte file.
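Here is a minimal sketch of the parallel-upload-with-retry loop the tip describes, using `concurrent.futures` from the standard library. `FlakyStore` is a hypothetical in-memory stand-in that drops the first attempt of selected parts. It skips the real API's upload IDs and ETags. The demo uses tiny 4 KiB parts purely so it runs instantly; real S3 parts must be at least 5 MiB except the last.

```python
import concurrent.futures
import threading
from typing import Dict, Set

class FlakyStore:
    """Hypothetical multipart target that fails the first try of chosen parts."""

    def __init__(self, fail_once: Set[int]):
        self.parts: Dict[int, bytes] = {}
        self._fail_once = set(fail_once)
        self._lock = threading.Lock()  # upload_part is called from worker threads
        self.attempts = 0

    def upload_part(self, number: int, data: bytes) -> None:
        with self._lock:
            self.attempts += 1
            if number in self._fail_once:
                self._fail_once.discard(number)
                raise ConnectionError(f"part {number} dropped")
            self.parts[number] = data

    def complete(self, total: int) -> bytes:
        # Stitch parts back together in part-number order.
        return b"".join(self.parts[n] for n in range(1, total + 1))

def upload_with_retries(store: FlakyStore, data: bytes, part_size: int,
                        max_retries: int = 3, workers: int = 4) -> bytes:
    # Number parts from 1, matching multipart part numbering.
    chunks = {i + 1: data[off:off + part_size]
              for i, off in enumerate(range(0, len(data), part_size))}
    pending = set(chunks)
    for _ in range(max_retries + 1):  # first attempt plus retries
        if not pending:
            break
        with concurrent.futures.ThreadPoolExecutor(max_workers=workers) as pool:
            futures = {pool.submit(store.upload_part, n, chunks[n]): n for n in pending}
            done, _ = concurrent.futures.wait(futures)
        # Only parts whose upload raised stay pending for the next round.
        pending = {futures[f] for f in done if f.exception() is not None}
    if pending:
        raise RuntimeError(f"parts still failing after retries: {sorted(pending)}")
    return store.complete(len(chunks))

payload = bytes(range(256)) * 100  # 25,600 bytes -> 7 parts of up to 4 KiB
store = FlakyStore(fail_once={2, 5})
result = upload_with_retries(store, payload, part_size=4096)
print(result == payload, store.attempts)  # True 9
```

Only parts 2 and 5 are sent twice. The other five are uploaded once, which is the whole point of chunking: a dropped connection costs one chunk, not the file.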

TL;DR

Keep it simple. Files go in blob storage. Metadata goes in your database. Point one to the other.

Avoid using blob storage as a primary database for structured data. Instead, store metadata in a primary database and point to the URL in blob storage. This keeps your system performant, cost-effective, and highly scalable.

I used LLMs to correct grammar, typos, etc.